2022.01.25

Coursera - Machine Learning_Andrew Ng - Week 2

Multiple features (variables)

Gradient descent for multiple variables

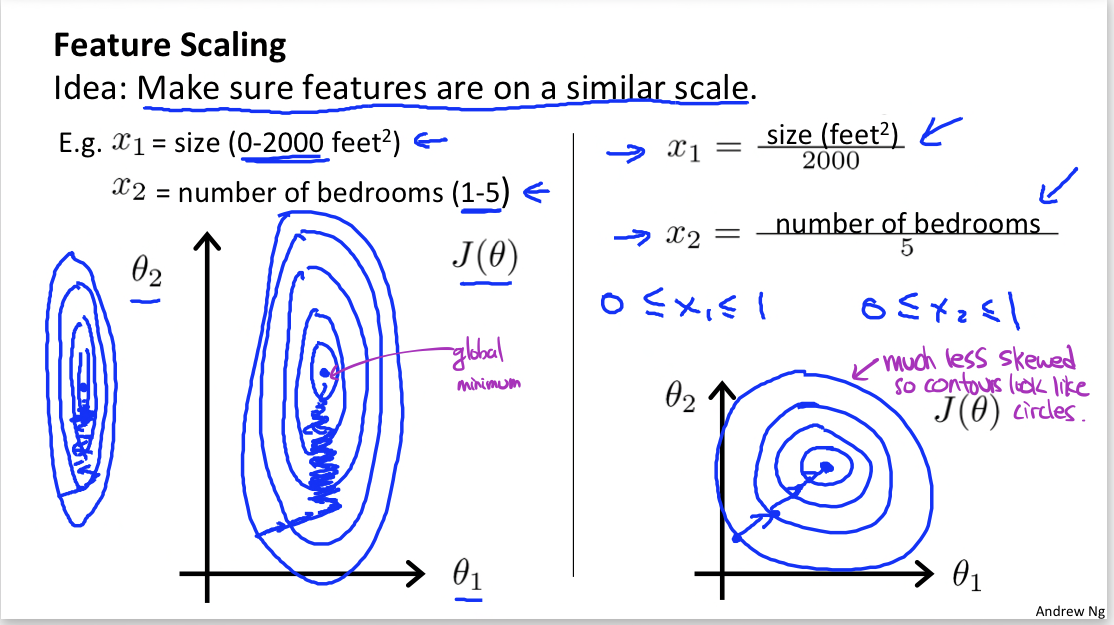
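A minimal sketch of the update rule for multiple variables, written in vectorized form with NumPy. The function names, the toy data, and the values of `alpha` and `num_iters` are assumptions made for illustration, not taken from the course material.

```python
import numpy as np

def compute_cost(X, y, theta):
    """Squared-error cost J(theta) = 1/(2m) * sum((X @ theta - y)^2)."""
    m = len(y)
    residual = X @ theta - y
    return float(residual @ residual) / (2 * m)

def gradient_descent(X, y, alpha=0.1, num_iters=1000):
    """Batch gradient descent. X must already contain a leading column of ones (x0 = 1)."""
    m, n = X.shape
    theta = np.zeros(n)
    cost_history = []
    for _ in range(num_iters):
        # One vectorized step updates every theta_j simultaneously.
        gradient = X.T @ (X @ theta - y) / m
        theta = theta - alpha * gradient
        cost_history.append(compute_cost(X, y, theta))
    return theta, cost_history

# Made-up data generated from y = 1 + 2*x1 + 3*x2
features = np.array([[0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [2.0, 1.0], [0.5, 2.0]])
y = 1 + 2 * features[:, 0] + 3 * features[:, 1]
X = np.column_stack([np.ones(len(y)), features])

theta, cost_history = gradient_descent(X, y, alpha=0.1, num_iters=5000)
print(theta)  # should be close to the generating coefficients 1, 2, 3
```

Recording the cost at every iteration is optional, but it makes it easy to check later whether J(θ) is actually decreasing.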

Gradient descent in practice 1: Feature Scaling

- feature scaling

: a simple trick that makes gradient descent run much faster and converge in far fewer iterations.

: make sure features are on a similar scale ⇒ get every feature into approximately the range -1 ≤ xᵢ ≤ 1

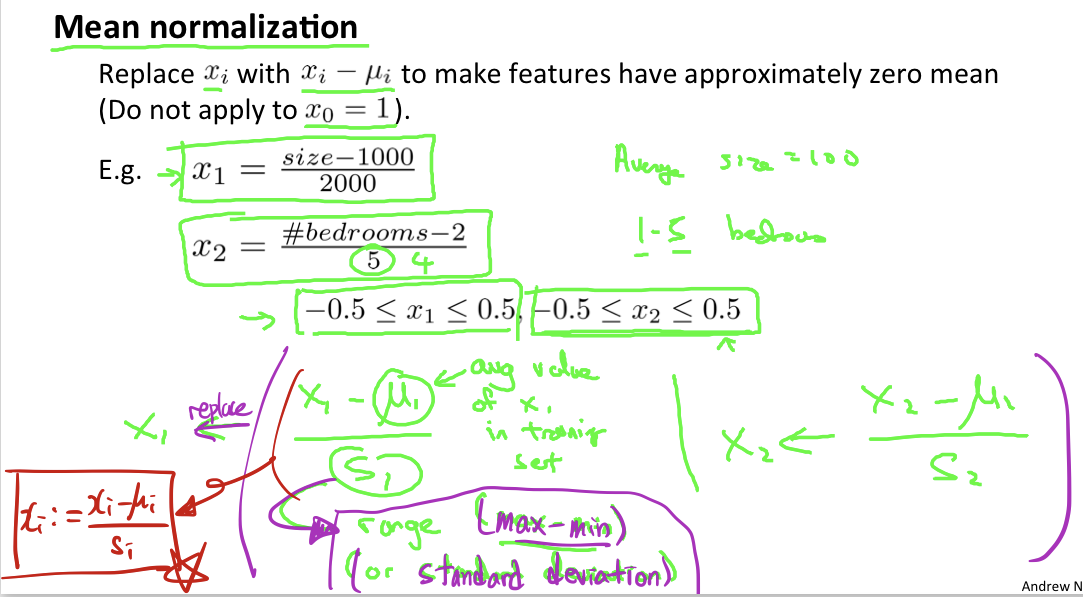

- mean normalization
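Mean normalization replaces each feature xᵢ with (xᵢ − μᵢ) / sᵢ, where μᵢ is the feature's mean and sᵢ is its range (max − min) or its standard deviation. The sketch below uses the range; the function name and the sample housing values are assumptions made for illustration.

```python
import numpy as np

def mean_normalize(X):
    """Return (X - mean) / range for each column, plus the statistics used."""
    mu = X.mean(axis=0)
    # Range of each column; the standard deviation is a common alternative.
    # A constant column would give a range of 0 and is not handled here.
    spread = X.max(axis=0) - X.min(axis=0)
    return (X - mu) / spread, mu, spread

# Made-up features: house size in square feet, number of bedrooms
X_raw = np.array([[2104.0, 5.0], [1416.0, 3.0], [1534.0, 3.0], [852.0, 2.0]])
X_scaled, mu, spread = mean_normalize(X_raw)
print(X_scaled)
```

Keep `mu` and `spread` around: a new example has to be scaled with the same statistics before the learned θ is applied to it.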

Gradient Descent in practice 2: Learning rate

- Debugging: make sure gradient descent is working correctly

(either plot J(θ) against the number of iterations, or use an automatic convergence test)

If α is too small ⇒ slow convergence

If α is too big ⇒ J(θ) may not decrease on every iteration; may not converge

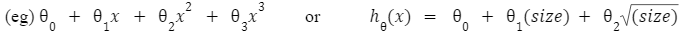
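The checks above can be sketched on a toy cost J(θ) = θ², whose gradient is 2θ. The helper names, the threshold `epsilon`, and the three values of α are assumptions chosen only to show each case.

```python
def run(alpha, num_iters=50, theta=1.0):
    """Gradient descent on the toy cost J(theta) = theta^2; returns J after each step."""
    history = []
    for _ in range(num_iters):
        theta -= alpha * 2 * theta
        history.append(theta ** 2)
    return history

def diagnose(cost_history, epsilon=1e-3):
    """Classify a run from its recorded J(theta) values, one per iteration."""
    for previous, current in zip(cost_history, cost_history[1:]):
        if current > previous:
            return "increasing: try a smaller alpha"
        if previous - current < epsilon:
            # Automatic convergence test: J dropped by less than epsilon in one step.
            return "converged"
    return "still decreasing: run more iterations or try a larger alpha"

print(diagnose(run(alpha=0.1)))    # reasonable alpha
print(diagnose(run(alpha=1.1)))    # alpha too big
print(diagnose(run(alpha=0.005)))  # alpha too small
```

One caveat of the automatic test is that a suitable `epsilon` is hard to pick: with a very small α, the per-step decrease can fall below the threshold long before the minimum is reached, which is one reason to look at the plot as well.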

Features and Polynomial Regression

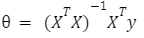
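Polynomial regression reuses linear regression by treating powers of a feature as new features (x, x², x³, ...). A small sketch, with a made-up feature vector and a hypothetical helper name, shows why feature scaling matters even more in this setting:

```python
import numpy as np

def polynomial_features(x, degree):
    """Stack x, x^2, ..., x^degree as columns of a feature matrix."""
    return np.column_stack([x ** p for p in range(1, degree + 1)])

x = np.linspace(1.0, 10.0, 10)  # hypothetical single feature with values 1..10
P = polynomial_features(x, 3)

# Range (max - min) of each column: x, x^2, x^3
ranges = P.max(axis=0) - P.min(axis=0)
print(ranges)
```

The three columns span very different ranges, so they should be scaled before running gradient descent on them.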

Normal Equation formula

θ = (XᵀX)⁻¹Xᵀy
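The normal equation solves for θ in one step, θ = (XᵀX)⁻¹Xᵀy, with no learning rate and no iterations. A minimal NumPy sketch follows; the function name and the toy data are assumptions, and `np.linalg.pinv` is used instead of a plain inverse so that a singular XᵀX does not raise an error.

```python
import numpy as np

def normal_equation(X, y):
    """Closed-form least-squares solution theta = pinv(X^T X) @ X^T @ y."""
    return np.linalg.pinv(X.T @ X) @ X.T @ y

# Made-up data generated from y = 1 + 2*x1 + 3*x2
features = np.array([[0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [2.0, 1.0], [0.5, 2.0]])
y = 1 + 2 * features[:, 0] + 3 * features[:, 1]
X = np.column_stack([np.ones(len(y)), features])  # leading column of ones for x0

theta = normal_equation(X, y)
print(theta)  # should be close to the generating coefficients 1, 2, 3
```

No feature scaling is needed for this approach.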

⇒ Compare with Gradient Descent

| Gradient Descent | Normal Equation |
|---|---|
| needs to choose α | no need to choose α |
| needs many iterations | needs no iterations |
| works well even when n is large | slow if n is very large, since it needs to compute (XᵀX)⁻¹ |
